This guide contains detailed observations of the uncertain and opaque [Scout] operation block, studied in its natural environment: the game’s Behavior Controller.

Scout Behavior

If you’ve ever taken a passing glance at the Behavior Controller programming UI, you may have noticed a block under the [Move] category called [Scout]. “That’s nice,” you think foolishly to yourself, “They’ve included a simple instruction to explore unmapped areas. This will be both easy and convenient.”

Narrator: But it was all problemo.

Four hours of frustration, 40 ruined robots, and roughly a hundred reloaded autosaves later, you give up on trying to reconcile the simple command with its baffling behavior. And that’s how we found ourselves here: me writing, and you reading.

I, too, became incensed at the [Scout] command’s utter lack of self-preservation. So, I did some SCIENCE!

The Issue

At the core of all problems with the [Scout] instruction is a simple fact: the Devs have made the instruction a Black Box. (From Wikipedia: In science, computing, and engineering, a black box is a system which can be viewed in terms of its inputs and outputs (or transfer characteristics), without any knowledge of its internal workings. Its implementation is “opaque”.)

Once the Behavior Controller reaches and executes the [Scout] block, your robot’s fate is sealed. The robot will lock on to a seemingly arbitrary point nearby and drive blindly through Bugs, Blight, and Broken robots to reach it. No clever coding or screaming sensors will dissuade it from its goal; not Radar, nor Signal Readers, nor [Get HP] switches are capable of interrupting this impenetrable instruction prematurely. This is, in professional terminology, bad.

The one and only peek we’re allowed into this twisted octopus of obscured code comes from turning on the game’s “Show Paths (J)” setting.

This changes the [Scout] command from being completely opaque to being more of a “read-only” operation: whilst we still cannot see or affect how the decision is made, we can see what that decision is. And from there, we can begin to see patterns.

Let’s Get The Facts

To make [Scout]’s otherwise erratic behavior easier to study in detail, I chose to create a simple program that would (ideally) map the command’s chosen coordinates with as little outside interference as possible. After a few hours of trial-and-error, I settled on this:

Summarized, the robot will:

1) Navigate directly to a pre-defined starting coordinate.

2) Place a Beacon upon arrival.

3) Execute the [Scout] instruction exactly one time.

4) Repeat steps 2 and 3 until countermanded by the Player.

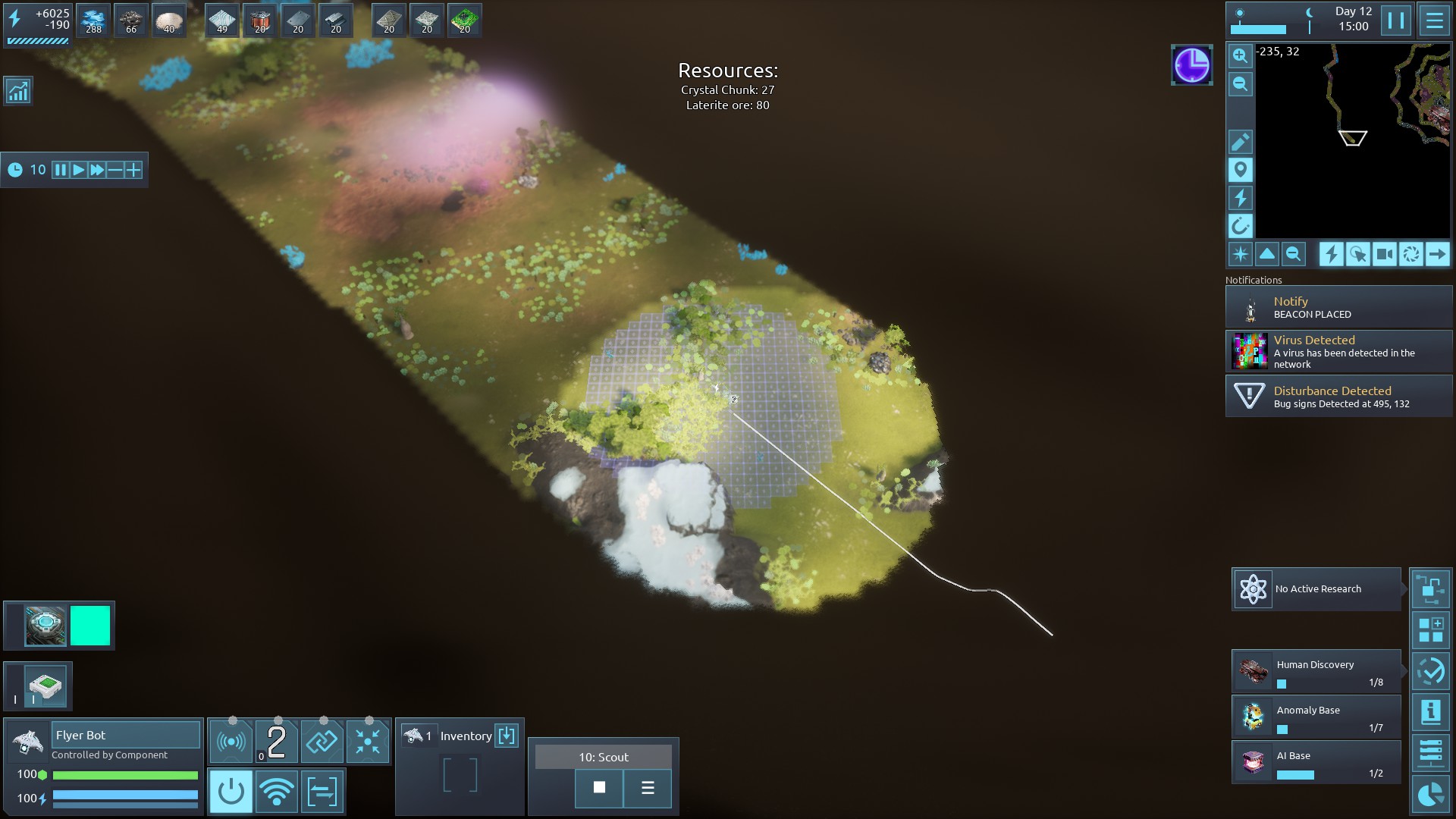
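For anyone who wants to analyse their own runs outside the game, the four steps above can be mirrored in a few lines of Python. This is a minimal sketch, not anything the game exposes: `run_scout_experiment` and `toy_scout` are hypothetical names, and `toy_scout` is a made-up stand-in for the real [Scout] decision (which is exactly the part we can’t see). The harness only records where each Beacon lands.

```python
import math
from typing import Callable, List, Tuple

Point = Tuple[float, float]

def run_scout_experiment(start: Point,
                         scout_target: Callable[[Point], Point],
                         iterations: int) -> List[Point]:
    """Mirror the four-step test program and log every Beacon position.

    `scout_target` is a hypothetical stand-in for the opaque [Scout]
    decision: given the robot's position, it returns the next destination.
    """
    beacons: List[Point] = []
    position = start                       # 1) fly straight to the starting coordinate
    for _ in range(iterations):            # 4) repeat until the Player intervenes
        beacons.append(position)           # 2) place a Beacon on arrival
        position = scout_target(position)  # 3) execute [Scout] exactly once
    return beacons

def toy_scout(p: Point) -> Point:
    """Made-up rule for exercising the harness only: rotate 10 degrees
    anti-clockwise about a Base at (0, 0). Not the game's real behavior."""
    angle = math.radians(10)
    x, y = p
    return (x * math.cos(angle) - y * math.sin(angle),
            x * math.sin(angle) + y * math.cos(angle))

beacons = run_scout_experiment((200.0, 0.0), toy_scout, iterations=36)
print(len(beacons))  # 36
```

The toy rule exists only so the sketch runs; the interesting data comes from the Beacons the real robot places.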

To ensure we study only the chosen coordinates, and aren’t forced to navigate around a Plateau or body of water, the Scouting robot will need to fly. Our first issue: flying robots have only a single Internal Component socket, so they cannot carry both a Behavior Controller and a power supply. The smart, simple solution would be to use Power Transmitters to fuel the thing while it carries the program. I, however, am neither smart nor simple (and also it was like, 3am and I wasn’t thinking).

So instead, I decided to have a second flying unit follow the Scouting unit, providing power and, more importantly, Virus protection. (Aside from the robot being destroyed, ofc, one of the few things I’ve discovered that can interfere with the [Scout] operation is the robot in question being abruptly turned off. Since the code will not continue until the robot reaches its destination, and a turned-off robot cannot move, the Behavior Controller will never reach a command to turn the robot back on, leaving it stuck in place forever.)
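The stuck-forever failure is a plain deadlock: the instruction that would turn the robot back on sits after an instruction that can only finish by moving. A toy model makes this concrete. It is a sketch under assumptions (straight-line travel at a fixed `speed` per tick, and a `max_ticks` cap standing in for “forever”); `run_blocking_move` and its parameters are hypothetical and do not come from the game.

```python
from typing import Optional

def run_blocking_move(distance: float, speed: float, powered: bool,
                      max_ticks: int = 10_000) -> Optional[int]:
    """Toy model of a move instruction that blocks until arrival.

    Returns the tick on which the robot arrives, or None if it has not
    arrived after max_ticks. While this loop runs, no later instruction
    (such as one that turns the robot back on) gets to execute.
    """
    remaining = distance
    for tick in range(1, max_ticks + 1):
        if powered:
            remaining -= speed  # a powered robot closes the gap each tick
        # An unpowered robot makes no progress, and nothing outside this
        # loop can flip `powered` back on while the instruction is blocking.
        if remaining <= 0:
            return tick
    return None

print(run_blocking_move(100, 5, powered=True))   # 20
print(run_blocking_move(100, 5, powered=False))  # None
```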

Utilizing an oversight where an Internal Drone Port can be swapped for another Component while the docked Drone is performing a task elsewhere, I can have a Transport Drone (they’re fast enough to keep pace with the Flying robot) follow another unit without having to set its GoTo Register.

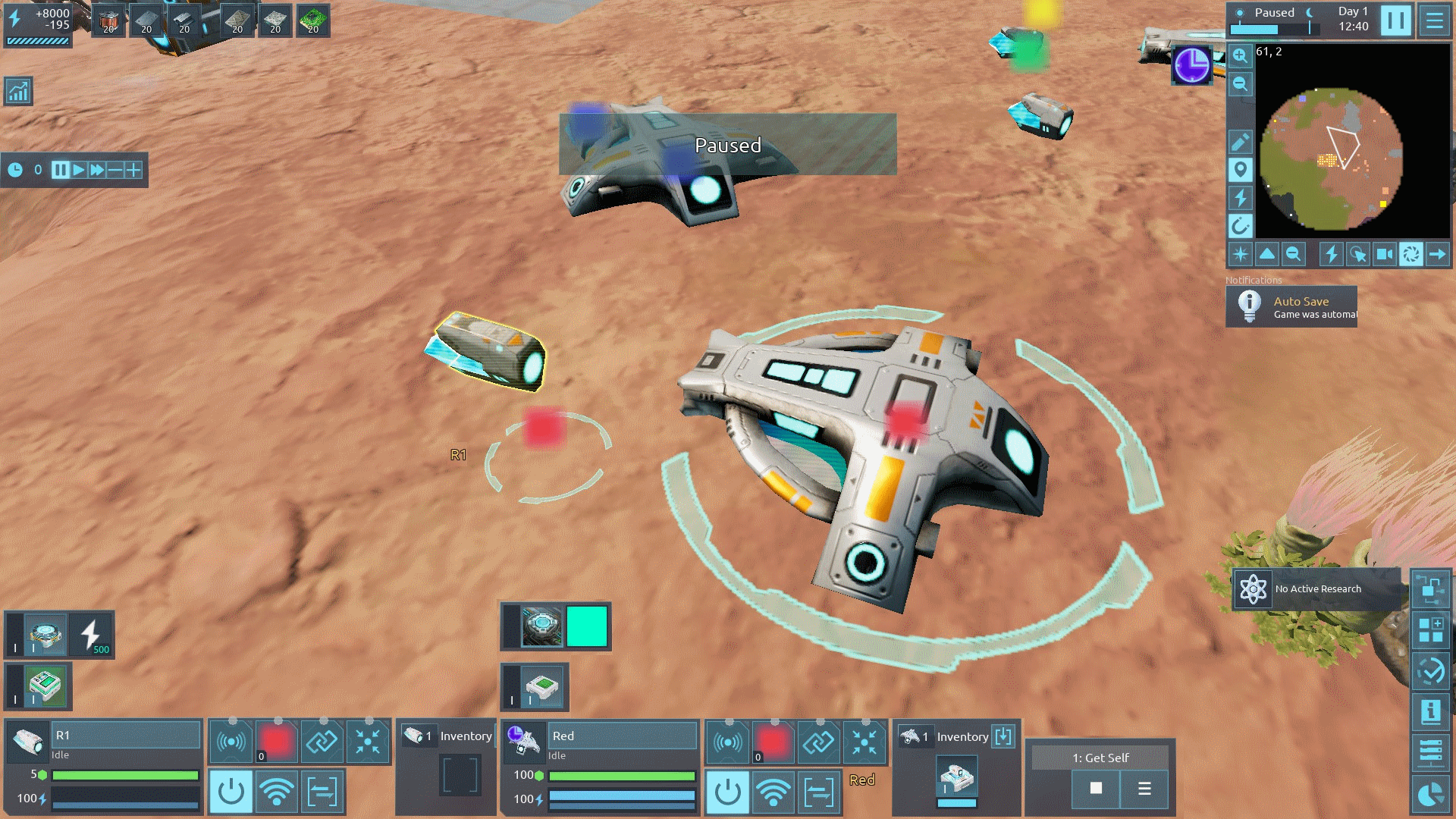

These were the robots chosen to perform this experiment:

Results & Analysis

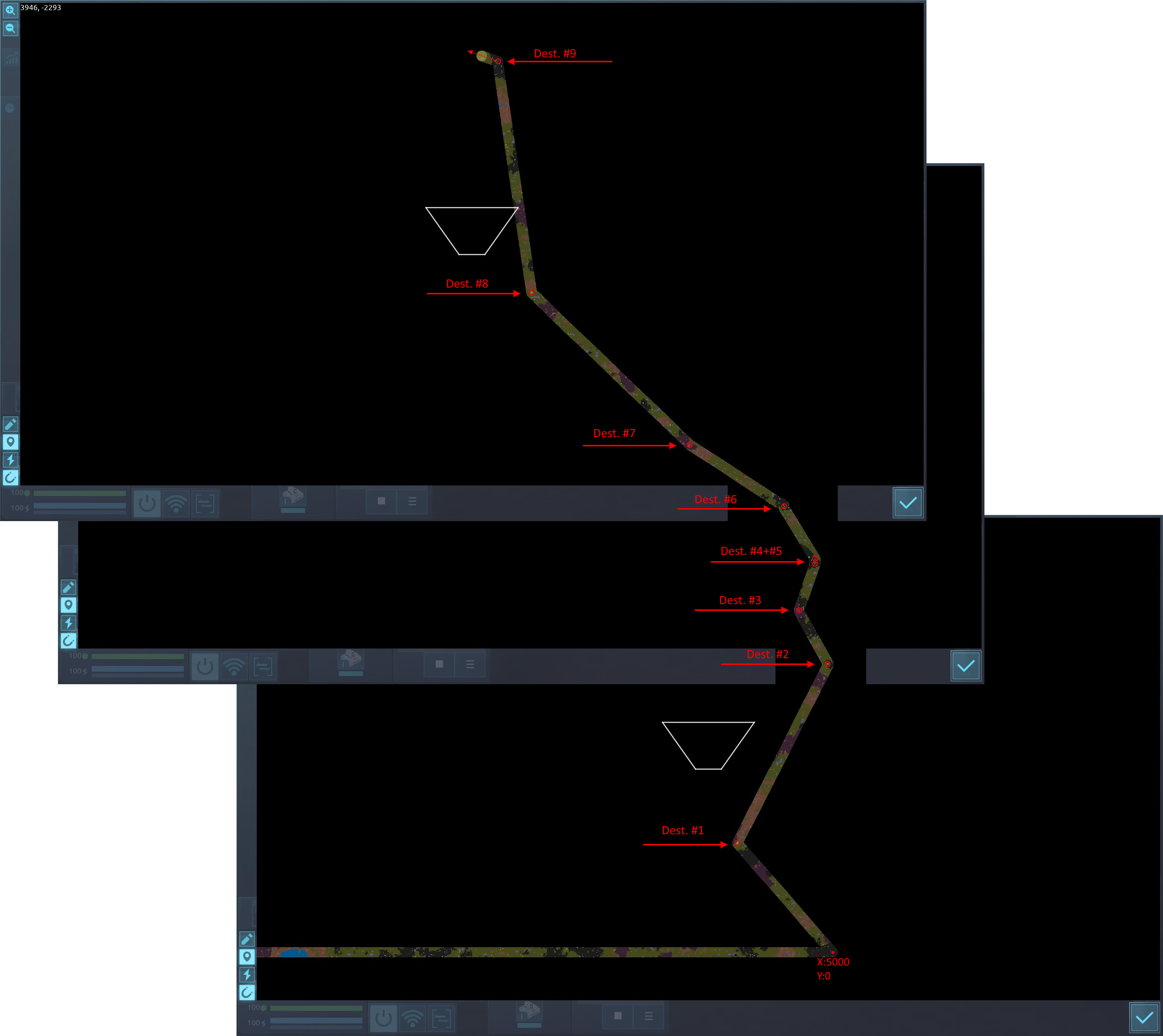

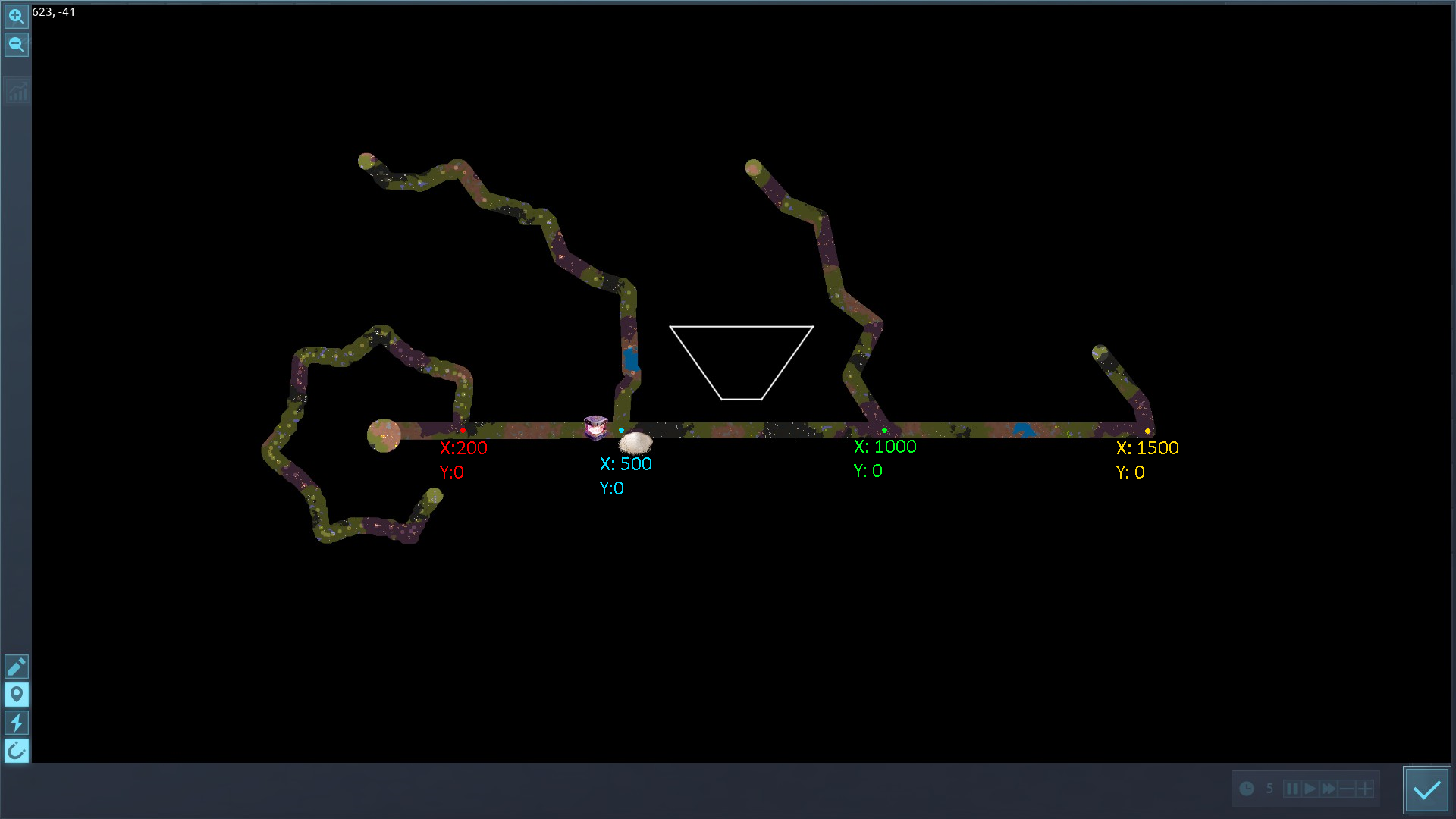

I chose to run four experiments in parallel, with each Scout being given a starting location progressively farther from the Base, in a single direction (in this case, East).

The units Red, Blue, Green, and Yellow were sent to begin [Scout]-ing at (200,0), (500,0), (1000,0), and (1500,0), respectively.

And already we can see some stark differences in their otherwise similar behavior.

The first thing we notice is that the [Scout] command consistently directs the robots anti-clockwise, along paths centered on the Base.

(Ignore the fact that I started all four robots near the Base, and therefore Red has almost completed a full lap while Yellow is only just getting started.)
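If you log the Beacon coordinates from a run (copied down by hand; this sketch assumes no export feature), the anti-clockwise claim can be checked numerically by adding up the signed angle each hop sweeps around the Base. A minimal sketch, assuming the Base sits at (0, 0) and that y increases upward; if the map’s y axis points down instead, the sign flips. `swept_angle` is a hypothetical helper name.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]

def swept_angle(beacons: List[Point], base: Point = (0.0, 0.0)) -> float:
    """Total signed angle, in radians, swept around `base` by successive Beacons.

    Positive means anti-clockwise when y increases upward; if the map's
    y axis points down, read the sign the other way round.
    """
    total = 0.0
    for (x0, y0), (x1, y1) in zip(beacons, beacons[1:]):
        a0 = math.atan2(y0 - base[1], x0 - base[0])
        a1 = math.atan2(y1 - base[1], x1 - base[0])
        # Wrap each step into [-pi, pi) so crossing the +/-pi seam behind
        # the Base is not mistaken for most of a lap in the other direction.
        total += (a1 - a0 + math.pi) % (2 * math.pi) - math.pi
    return total

# One anti-clockwise lap through four points at radius 200
lap = [(200.0, 0.0), (0.0, 200.0), (-200.0, 0.0), (0.0, -200.0), (200.0, 0.0)]
print(round(swept_angle(lap) / (2 * math.pi), 3))  # 1.0
```

Dividing by 2π gives laps completed: positive for anti-clockwise, negative for clockwise. The wrapping assumes each hop covers well under half a lap, which holds when a lap takes dozens of Beacons.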

We can also see that the robots do not trace a neat circle: while Blue and Green are making jagged progress, Red has taken the Spirograph approach with a jaunty, swooping path.

It also isn’t actually a circle.

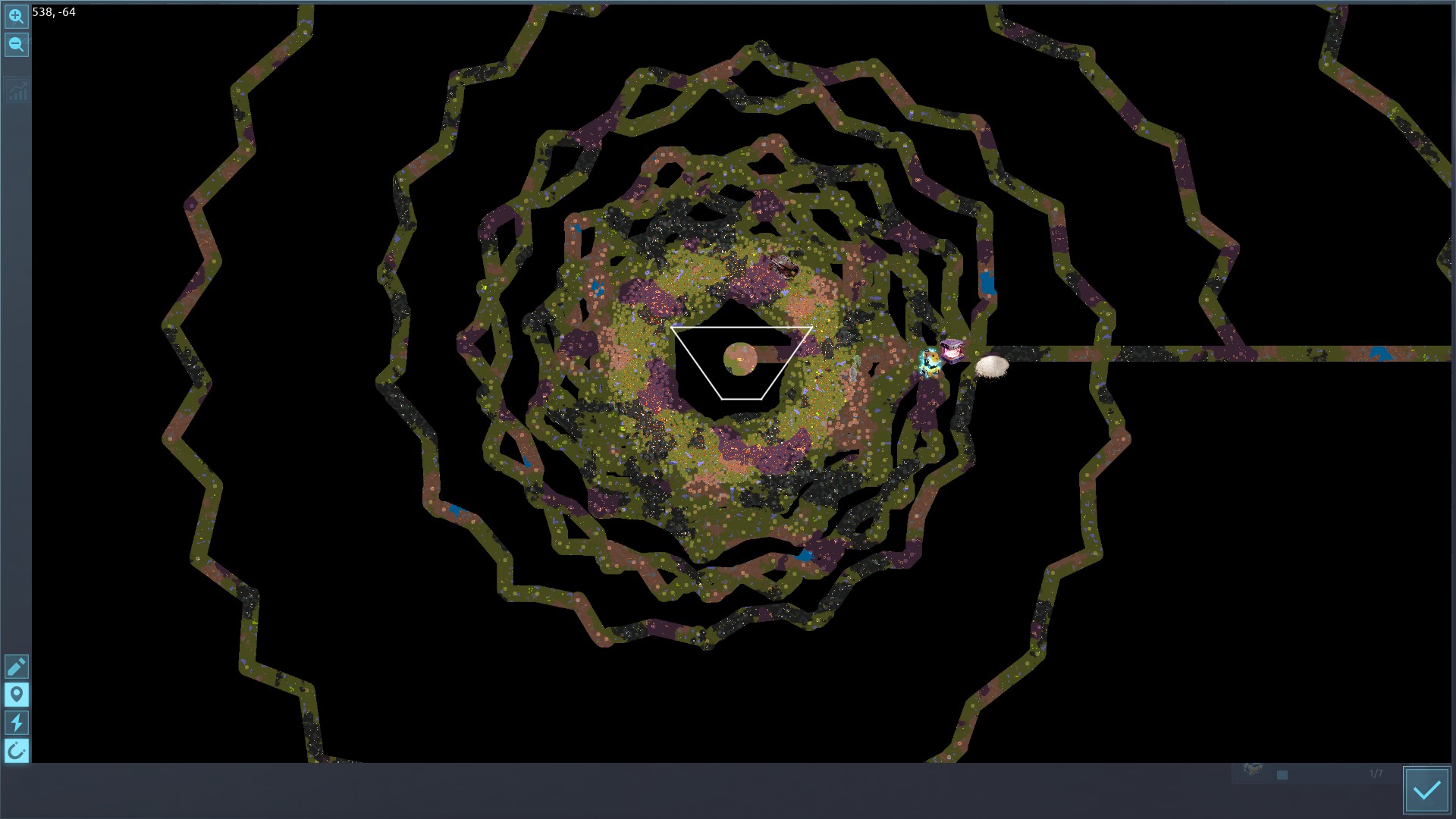

Yes, for whatever reason, [Scout] directs the robot in a closing spiral, with the curve becoming shallower as it nears the center, and becoming arbitrarily small ~150sq away from the Base.
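To put a number on “closing spiral”, one option is to fit a straight line to each Beacon’s distance from the Base against the order it was placed in; a negative slope means the path is tightening. This is a sketch assuming logged Beacon coordinates and a Base at (0, 0). `radius_trend` is a hypothetical name, and the straight-line fit is my own simplification, since the real spiral may not shrink at a constant rate.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]

def radius_trend(beacons: List[Point], base: Point = (0.0, 0.0)) -> float:
    """Least-squares slope of distance-to-Base against Beacon index.

    A negative slope means the path is closing in on the Base.
    """
    radii = [math.hypot(x - base[0], y - base[1]) for x, y in beacons]
    n = len(radii)
    if n < 2:
        raise ValueError("need at least two Beacons")
    mean_i = (n - 1) / 2
    mean_r = sum(radii) / n
    cov = sum((i - mean_i) * (r - mean_r) for i, r in enumerate(radii))
    var = sum((i - mean_i) ** 2 for i in range(n))
    return cov / var

# Synthetic check: radius shrinks by 2 sq per Beacon while turning 10 degrees per hop
spiral = [((500 - 2 * i) * math.cos(math.radians(10 * i)),
           (500 - 2 * i) * math.sin(math.radians(10 * i))) for i in range(50)]
print(round(radius_trend(spiral), 3))  # -2.0
```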

The number and spread of destination coordinates also changes with respect to the [Scout]-ed radius.

Red placed an astounding 64 Beacons on its first lap, while Blue, its closest neighbor, placed 76 Beacons: a mere 12 more despite covering a much longer path. And although Yellow’s complete path is not visible in this screenshot, the 31 Beacons we can see suggest a much lower total than either of the inner two. (With how spread out Yellow’s Beacons are, I’m reluctant to believe there are another 30+ hidden in the short arcs above and below the map edges.)
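For a rough sense of scale, the Beacon counts can be turned into an average hop length by pretending each lap is a circle at the robot’s starting radius. It isn’t (the path is a jagged spiral), so treat this as back-of-the-envelope arithmetic on the numbers above; `mean_hop` is a hypothetical helper.

```python
import math

def mean_hop(radius_sq: float, beacons_per_lap: int) -> float:
    """Average Beacon spacing if one lap were a circle of this radius.

    A rough estimate only: the real [Scout] path is a jagged, inward spiral.
    """
    return 2 * math.pi * radius_sq / beacons_per_lap

for name, radius, count in [("Red", 200, 64), ("Blue", 500, 76)]:
    print(f"{name}: ~{mean_hop(radius, count):.1f} sq per Beacon")
# Red: ~19.6 sq per Beacon
# Blue: ~41.3 sq per Beacon
```

Under that assumption, Blue’s average hop comes out at roughly twice Red’s, which fits Blue covering a much longer path with only 12 more Beacons.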

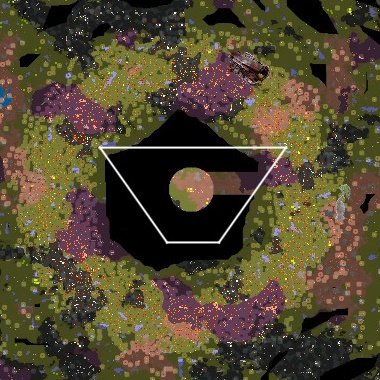

I chose to leave the experiment running while I did other things for ~20 minutes (at 5x speed), and came back to this. Note that while all four robots have joined Red in the Beacon Mosh Pit(tm), none of them have placed Beacons any closer than ~100sq from the Base at the center.

Final Thoughts

While this initial study might make the [Scout] block’s behavior a little more predictable, it is far from making it understood, manageable, or excusable. Any protection or detection required by the robot using the command can only be triggered at the beginning or end of the [Scout] path, and nowhere in-between.

This experiment was very bare-bones, and only covered behaviors >200sq from the Base; if I decide to repeat or expand the study, I would probably send robots in multiple directions and include at least one that begins [Scout]-ing immediately from the Base to determine what, if any, is the maximum natural radius of exploration.

Further study into the relation between sequential Beacon distance and the robot’s distance from the Base will also have to be conducted.
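One way to start on that question, assuming a list of logged Beacon coordinates and a Base at (0, 0), is to pair each hop’s length with the distance from the Base where it began, then measure how strongly the two move together. `hop_vs_radius` and `pearson` are hypothetical helpers, and Pearson correlation is my choice of measure here, not something the game or the results above prescribe.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]

def hop_vs_radius(beacons: List[Point],
                  base: Point = (0.0, 0.0)) -> List[Tuple[float, float]]:
    """Pair each hop's length with the Base distance at which the hop started."""
    pairs = []
    for (x0, y0), (x1, y1) in zip(beacons, beacons[1:]):
        radius = math.hypot(x0 - base[0], y0 - base[1])
        hop = math.hypot(x1 - x0, y1 - y0)
        pairs.append((radius, hop))
    return pairs

def pearson(pairs: List[Tuple[float, float]]) -> float:
    """Pearson correlation between the two columns of `pairs` (-1 to 1)."""
    n = len(pairs)
    if n < 2:
        raise ValueError("need at least two hops")
    mean_x = sum(x for x, _ in pairs) / n
    mean_y = sum(y for _, y in pairs) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in pairs)
    sxx = sum((x - mean_x) ** 2 for x, _ in pairs)
    syy = sum((y - mean_y) ** 2 for _, y in pairs)
    if sxx == 0 or syy == 0:
        raise ValueError("one column is constant; correlation is undefined")
    return sxy / math.sqrt(sxx * syy)

print(hop_vs_radius([(200.0, 0.0), (200.0, 20.0)]))  # [(200.0, 20.0)]
print(pearson([(100, 10), (200, 20), (300, 30)]))    # 1.0
```

A value near +1 on real data would mean hops lengthen steadily as the robot gets farther from the Base; a value near 0 would mean distance alone doesn’t explain the spacing.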

I have included a screenshot of the Seed and Settings that went into generating the world used, in case anybody wants to verify my results.

Also, please enjoy this composite image of a robot’s path when told to begin at (5000,0), annotated to highlight the extreme distances [Scout] selects as destinations as Dist-2-Base increases.